Keeping common scripts in GitLab CI

With this post, I’d like to start a series of CI-related tips, targeted mostly at GitLab, since that’s my go-to tool for things CI/CD-related. I’m sure most of them could be easily applied to other CI systems, though.

While GitLab does a great job at many things (repository hosting and related stuff, like MRs, pipelines, issue boards, etc.), it sometimes lacks more specialized features in some areas. With heavy usage of pipelines in my projects, I often had to look for workarounds and smart tricks. So I’d like to share some of them now, based on my experiences.

The issue

An example CI definition could look something like this:

    stages:
      - test
      - build
      - deploy

    test:
      image: golang:1.11
      stage: test
      script:
        - go test ./...
        - go vet ./...

    build:
      image: golang:1.11
      stage: build
      script:
        - go build -o ./bin/my-app ./cmd/my-app
      artifacts:
        paths:
          - bin/

    deploy:
      stage: deploy
      script:
        - ... # upload bin/ somewhere

I have some issues with using bare bash commands like this. See what happens when you need to change something in one of your jobs:

- You need to push a new commit to the application repository, triggering a full pipeline for the project.

- Your changes won’t propagate to other branches until other developers merge your commit from master.

- Your changes will affect only this one repository. If you maintain several similar projects, you need to push the changes to all of them.

Common scripts repository

One of the things I missed when starting out with GitLab pipelines was a place for common scripts, which could be used by multiple repositories. At that time include was not yet available, but even now it hardly solves the issue for me.

Reusing parts of the YAML definition is one thing, but the other is reusing the standard scripts across all of your projects. I’d prefer things like “build app”, “package app”, “deploy app” to be one-liners in the pipeline.

I also feel that this fits nicely into the DevOps mindset: ops prepare the scripts, which are easy to use by developers. The scripts are developed using the same good practices as the application code itself: with code reviews and their own pipeline including unit tests and static checks.

I usually end up with a mix of bash and Python scripts. Bash works well for simple scripts, but for more complex stuff, going with Python (or another scripting language you like) should save you some future headaches. Python is usually easier for other people to read too, but keep in mind it may not be included in every Docker image you’ll be using in your jobs.
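One way to guard against a missing interpreter is to check for it at the top of a shared script. This is only a sketch: `require_cmd` is a hypothetical helper name, not something GitLab provides.

```shell
# require_cmd <command>
# Fail early with a clear message when a tool the script depends on
# is missing from the job's image. (Hypothetical helper.)
require_cmd() {
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "error: '$1' is not available in this image" >&2
        return 1
    fi
}
```

A script that needs Python could then start with `require_cmd python3 || exit 1`, so the job fails with an obvious message instead of a cryptic "command not found" halfway through.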

Implementation

The idea is pretty simple: fetch the scripts repository every time a job is run.

This should be as simple as adding global settings in your .gitlab-ci.yml:

    variables:
      SCRIPTS_REPO: https://gitlab.com/threedotslabs/ci-scripts

    before_script:
      - export SCRIPTS_DIR=$(mktemp -d)
      - git clone -q --depth 1 "$SCRIPTS_REPO" "$SCRIPTS_DIR"

You could also move the variables to the project’s CI/CD variables. Or include this config from another location. Or use your own docker images with the scripts repository baked in and run git pull in it.

The basic idea stays the same.

Note

Passing the --depth 1 option results in a shallow clone of the repository, i.e. one truncated to the most recent commit of the default branch (master here). Thanks to this, the overhead for each job is minimal.

Learn more in the docs.

Example script

Let’s say you have a bunch of Golang applications and you’d prefer to have a consistent build process for all of them.

Why would you want it, when a simple go build should be enough? For example, you might want to bake the version number into every one of your services: it’s usually useful to know which version you’re running! Putting that into a common script ensures that none of your applications will skip this step.

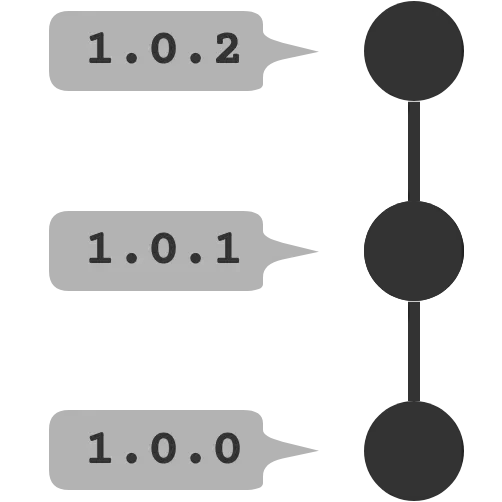

In the next post, I’ll show you how to generate semver versions for commits. For now, we’ll stick with git commit hash as our version number.

    #!/bin/bash
    # Build generic golang application.
    #
    # Example:
    #   build-go cmd/server example-server pkg.version.Version
    set -e

    if [ "$#" -ne 3 ]; then
        # Print usage to stderr so it doesn't get mixed into regular output.
        echo "Usage: $0 <package> <target_binary> <version_var>" >&2
        exit 1
    fi

    readonly package="$1"
    readonly target_binary="$2"
    readonly version_var="$3"

    readonly bin_dir="$CI_PROJECT_DIR/bin"

    mkdir -p "$bin_dir"

    # Set the variable named by $version_var to the commit hash at link time.
    go build -ldflags="-X $version_var=$CI_COMMIT_SHA" -o "$bin_dir/$target_binary" "$package"

Note

Don’t forget the set -e part. Without it, bash keeps going after a failed command, so your scripts may appear to succeed and cause some hard-to-find bugs.
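To see the difference, here is a small demonstration. The `run_demo` helper is made up for this example; it runs a snippet in a child shell and reports its output and exit status.

```shell
# run_demo <snippet>
# Run a snippet of shell code in a child shell and print what it
# wrote together with its exit status. (Hypothetical helper.)
run_demo() {
    out=$(sh -c "$1" 2>&1) && status=0 || status=$?
    printf 'output="%s" exit=%s\n' "$out" "$status"
}

# Without set -e, the failed command is ignored and the script carries on.
run_demo 'false; echo "still running"'          # output="still running" exit=0

# With set -e, the script stops at the first failing command.
run_demo 'set -e; false; echo "still running"'  # output="" exit=1
```

In a CI job, the first variant would be reported as green even though a step failed.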

This script expects that the resulting binary will be kept in the bin directory. It’s just another thing you might want to have consistent across repositories.

Note

Note that I used CI_ variables provided by GitLab. While they make the scripts really simple to run, this comes at the cost of more complicated testing, since you need to set up some environment variables before running the scripts locally. If you prefer a more explicit approach, just pass those values as arguments to your script.
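As a sketch of that explicit approach, the script could accept the version as an optional argument and fall back to the CI variable only when nothing is passed. The `resolve_version` name and the `dev` placeholder are assumptions made for this example.

```shell
# resolve_version [explicit_version]
# Print the version to embed in the binary: the explicit argument if
# given, otherwise GitLab's CI_COMMIT_SHA, otherwise the placeholder
# "dev" (an assumed default for local runs).
resolve_version() {
    if [ -n "${1:-}" ]; then
        printf '%s\n' "$1"
    else
        printf '%s\n' "${CI_COMMIT_SHA:-dev}"
    fi
}
```

The build script could then use `$(resolve_version "$4")` in place of `$CI_COMMIT_SHA`, which keeps it runnable on a developer machine without exporting any CI variables first.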

This script should be put in your scripts repository.

Don’t forget to set the execute permissions (chmod +x).

The last thing you need to do is use the script in your application repository:

    build:
      image: golang:1.11
      stage: build
      script:
        - $SCRIPTS_DIR/golang/build . example-server main.Version
      artifacts:
        paths:
          - bin/

And this is it! Your scripts should now always be up-to-date across your repositories. Of course this setup won’t make much sense if you’re using just one repository, so keep that in mind. Don’t overcomplicate it, if you can.

Authentication

If you’re using a private repository for your scripts, you’ll need to add some form of authentication to clone it. You can do this with either Deploy tokens or SSH deploy keys.

Using deploy tokens is a bit easier, so let’s see it in action.

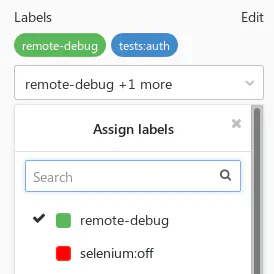

- In the scripts repository: generate a new deploy token. You’ll find it in the Settings -> Repository -> Deploy tokens section.

- In the application repository: add the generated user and token as new variables called SCRIPTS_USER and SCRIPTS_TOKEN. You can do this in the Settings -> CI / CD -> Variables section.

- Modify the SCRIPTS_REPO variable in your application CI definition:

    variables:
      SCRIPTS_REPO: https://$SCRIPTS_USER:$SCRIPTS_TOKEN@gitlab.com/threedotslabs/ci-scripts

Note

Managing secrets across multiple projects is easier if you add variables at the group level.
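If one of the two variables is missing, the clone fails with a rather unhelpful authentication error. A small guard can make that obvious. This is a sketch only, and `authenticated_repo_url` is a hypothetical helper.

```shell
# authenticated_repo_url <host/path>
# Print an HTTPS clone URL with the deploy token credentials embedded,
# or fail with a clear message when they are not set.
# (Hypothetical helper.)
authenticated_repo_url() {
    if [ -z "${SCRIPTS_USER:-}" ] || [ -z "${SCRIPTS_TOKEN:-}" ]; then
        echo "error: SCRIPTS_USER and SCRIPTS_TOKEN must be set" >&2
        return 1
    fi
    printf 'https://%s:%s@%s\n' "$SCRIPTS_USER" "$SCRIPTS_TOKEN" "$1"
}
```

Calling `authenticated_repo_url gitlab.com/threedotslabs/ci-scripts` produces the same URL as the SCRIPTS_REPO definition above. Be careful not to echo the result in job logs, since it contains the token.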

Testing the changes

If you’ve worked with multiple repositories before, you should already know that it adds some synchronization overhead. For example, pushing a broken script to the master branch could break builds in all your projects. That’s why code reviews and unit tests are very much welcome.

If unit testing the scripts is hard or just not possible, you could try testing them on branches first, before merging to master. You would just need to check out the chosen branch after cloning. What’s cool is that you can retry the failed job this way right after pushing changes to the script, without running the complete pipeline again.
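A minimal sketch of such a branch-aware clone could look like this. The `fetch_scripts` function and the `SCRIPTS_BRANCH` variable mentioned below are assumptions for illustration. With a shallow clone it’s simplest to ask git for the branch directly, since only one branch is fetched.

```shell
# fetch_scripts <repo_url> <target_dir> [branch]
# Shallow-clone the scripts repository. When a branch is given, clone
# the tip of that branch instead of the default one.
# (Hypothetical helper.)
fetch_scripts() {
    repo="$1"
    dir="$2"
    branch="${3:-}"
    if [ -n "$branch" ]; then
        git clone -q --depth 1 --branch "$branch" "$repo" "$dir"
    else
        git clone -q --depth 1 "$repo" "$dir"
    fi
}
```

With a variable such as SCRIPTS_BRANCH set on a single pipeline run or job, `fetch_scripts "$SCRIPTS_REPO" "$SCRIPTS_DIR" "$SCRIPTS_BRANCH"` would pick up the branch under test, while all other pipelines keep using master.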

What about Makefiles?

Moving all bash commands to a Makefile can tidy up your CI definition and allows developers to run the same checks and tests that will be run in the pipeline. For example, you could introduce a make test target that will run unit tests, make lint for static checks, and so on.

Sadly, this will mean duplicating the Makefile across all your repositories and it doesn’t really solve the issues mentioned above. Makefiles also have their own quirks, so better make sure you know what you’re doing or you might run into unexpected issues (e.g. silently passing on errors).

Wrapping up

While the script we’ve used is really trivial, this shows how this integration can be used for keeping all CI-related scripts in one place. I’ll show more advanced examples in the next posts, so stay tuned!

If you have any questions or you’d like to share other ideas for a similar workflow, hit me up on Twitter.