Robust gRPC communication on Google Cloud Run (but not only!)

Welcome to the third article from the series covering how to build business-oriented applications in Go! In this series, we want to show you how to build applications that are easy to develop, maintain, and fun to work with in the long term.

In this week’s article, I will describe how you can build robust internal communication between your services using gRPC. I will also cover the extra configuration required to set up authentication and TLS on Cloud Run.

Why gRPC?

Let’s imagine a story that is true for many companies:

Meet Dave. Dave works at a company that spent about 2 years building its product from scratch. During this time, they were pretty successful in finding thousands of customers who wanted to use their product. They started developing this application during the biggest “boom” for microservices, so that kind of architecture was an obvious choice for them. Currently, they have more than 50 microservices using HTTP calls to communicate with each other.

Of course, Dave’s company didn’t do everything perfectly. The biggest pain is that all engineers are currently afraid to change anything in the HTTP contracts. It is easy to make a change that is incompatible or that returns invalid data. It’s not rare for the entire application to stop working because of that. “Didn’t we build microservices to avoid that?” – this is the question asked by scary voices in Dave’s head every day.

Dave already proposed using OpenAPI to generate HTTP server responses and clients. But he soon found that he could still return invalid data from the API.

It doesn’t matter if it (already) sounds familiar to you or not. The solution for Dave’s company is simple and straightforward to implement. You can easily achieve robust contracts between your services by using gRPC.

The way servers and clients are generated from gRPC is much stricter than with OpenAPI. Needless to say, it’s far better than OpenAPI’s generated client and server, which just copy the structures.

Note

It’s important to remember that gRPC doesn’t solve the data quality problems. In other words – you can still send data that is not empty, but doesn’t make sense.

It’s important to ensure that data is valid on many levels, like the robust contract, contract testing, and end-to-end testing.

Another important “why” may be performance. You can find many benchmarks claiming that gRPC may be up to 10x faster than REST. When your API is handling millions of requests per second, it may be a case for cost optimization. In the context of applications like Wild Workouts, where traffic may be less than 10 requests/sec, it doesn’t matter.

To avoid being biased in favor of gRPC, I tried to find any reason not to use it for internal communication. I failed:

- the barrier to entry is low,

- adding a gRPC server doesn’t need any extra infrastructure work – it works on top of HTTP/2,

- it works with many languages like Java, C/C++, Python, C#, JS, and more,

- in theory, you are even able to use gRPC for the frontend communication (I didn’t test that),

- it’s “Goish” – the compiler ensures that you are not returning anything stupid.

Sounds promising? Let’s verify that with the implementation in Wild Workouts!

Note

This is not just another article with random code snippets.

This post is part of a bigger series where we show how to build Go applications that are easy to develop, maintain, and fun to work with in the long term. We are doing it by sharing proven techniques based on many experiments we did with teams we lead and scientific research.

You can learn these patterns by building with us a fully functional example Go web application – Wild Workouts.

We did one thing differently – we included some subtle issues in the initial Wild Workouts implementation. Have we lost our minds to do that? Not yet. 😉 These issues are common for many Go projects. In the long term, these small issues become critical and block adding new features.

It’s one of the essential skills of a senior or lead developer; you always need to keep long-term implications in mind.

We will fix them by refactoring Wild Workouts. In that way, you will quickly understand the techniques we share.

Do you know that feeling after reading an article about some technique and trying to implement it, only to be blocked by some issues skipped in the guide? Cutting these details makes articles shorter and increases page views, but this is not our goal. Our goal is to create content that provides enough know-how to apply the presented techniques. If you have not read the previous articles from the series yet, we highly recommend doing that.

We believe that in some areas, there are no shortcuts. If you want to build complex applications in a fast and efficient way, you need to spend some time learning that. If it was simple, we wouldn’t have large amounts of scary legacy code.

Here’s the full list of 14 articles released so far.

The full source code of Wild Workouts is available on GitHub. Don’t forget to leave a star for our project! ⭐

Generated server

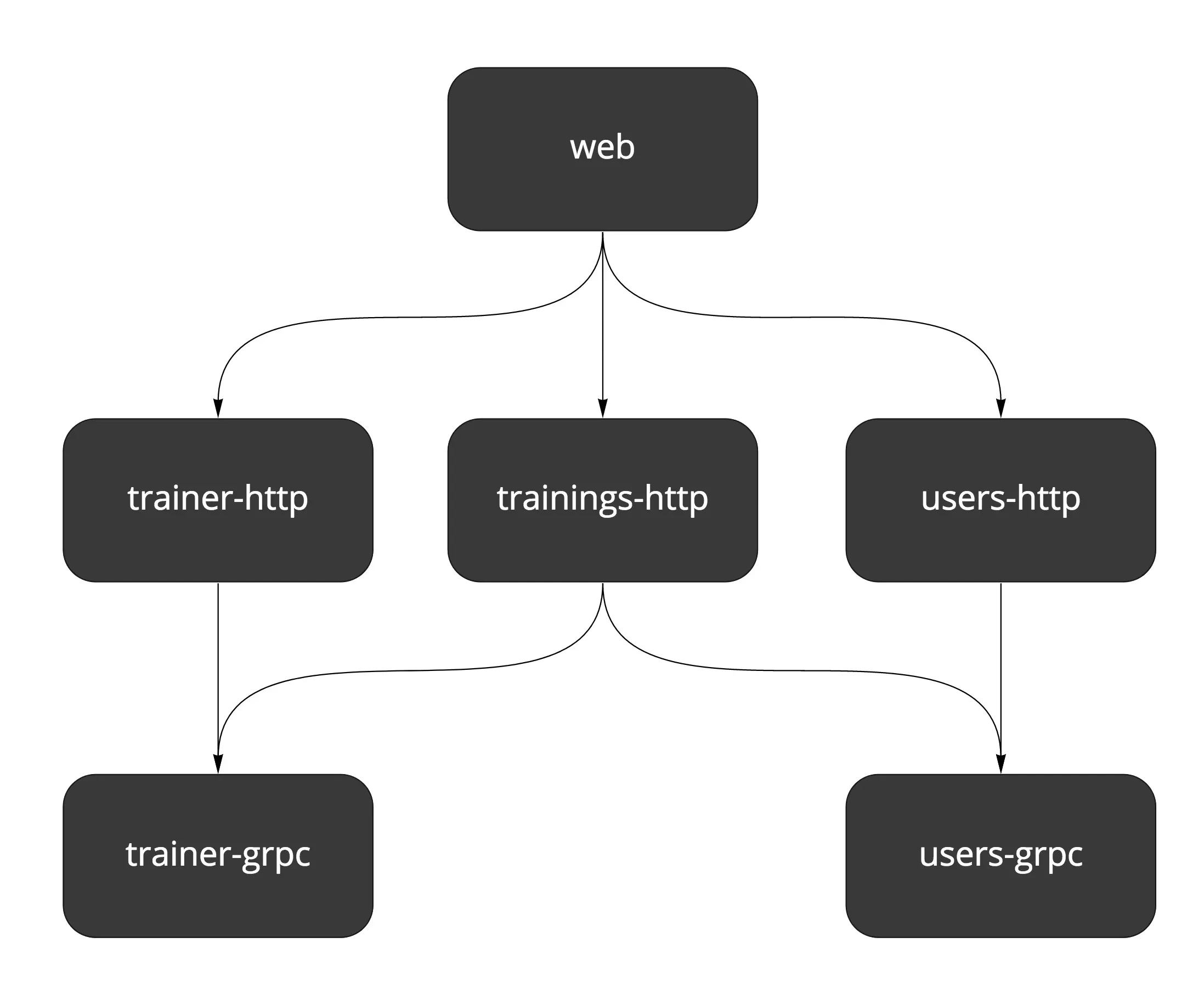

Currently, we don’t have a lot of gRPC endpoints in Wild Workouts. We can update trainer hours availability and user training balance (credits).

Let’s check the Trainer gRPC service.

To define our gRPC server, we need to create a trainer.proto file.

syntax = "proto3";

package trainer;

import "google/protobuf/timestamp.proto";

service TrainerService {

rpc IsHourAvailable(IsHourAvailableRequest) returns (IsHourAvailableResponse) {}

rpc UpdateHour(UpdateHourRequest) returns (EmptyResponse) {}

}

message IsHourAvailableRequest {

google.protobuf.Timestamp time = 1;

}

message IsHourAvailableResponse {

bool is_available = 1;

}

message UpdateHourRequest {

google.protobuf.Timestamp time = 1;

bool has_training_scheduled = 2;

bool available = 3;

}

message EmptyResponse {}

The .proto definition is converted into Go code by using the Protocol Buffer Compiler (protoc).

.PHONY: proto

proto:

protoc --go_out=plugins=grpc:internal/common/genproto/trainer -I api/protobuf api/protobuf/trainer.proto

protoc --go_out=plugins=grpc:internal/common/genproto/users -I api/protobuf api/protobuf/users.proto

Note

To generate Go code from .proto, you need to install protoc and the protoc Go plugin.

A list of supported types can be found in the Protocol Buffers Version 3 Language Specification. More complex built-in types like Timestamp can be found in the Well-Known Types list.

This is what an example generated model looks like:

type UpdateHourRequest struct {

Time *timestamp.Timestamp `protobuf:"bytes,1,opt,name=time,proto3" json:"time,omitempty"`

HasTrainingScheduled bool `protobuf:"varint,2,opt,name=has_training_scheduled,json=hasTrainingScheduled,proto3" json:"has_training_scheduled,omitempty"`

Available bool `protobuf:"varint,3,opt,name=available,proto3" json:"available,omitempty"`

XXX_NoUnkeyedLiteral struct{} `json:"-"`

// ... more proto garbage ;)

}

And the server:

type TrainerServiceServer interface {

IsHourAvailable(context.Context, *IsHourAvailableRequest) (*IsHourAvailableResponse, error)

UpdateHour(context.Context, *UpdateHourRequest) (*EmptyResponse, error)

}

The difference between HTTP and gRPC is that in gRPC we don’t need to take care of what we should return and how to do that. If I were to compare the level of confidence with HTTP and gRPC, it would be like comparing Python and Go. This way is much stricter: it’s impossible to return or receive a value of the wrong type, because the compiler will let us know about that.
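To make the "compiler will let us know" part concrete, here is a minimal sketch. It assumes hand-written stand-ins for the generated types (the real ones come from the generated trainer package) and a hypothetical `stubServer`. It only illustrates the idea and is not the Wild Workouts code.

```go
package main

import (
	"context"
	"fmt"
)

// Hand-written stand-ins for the generated types, so the sketch compiles
// without protoc. In the real project they live in the generated package.
type IsHourAvailableRequest struct{}

type IsHourAvailableResponse struct {
	IsAvailable bool
}

type TrainerServiceServer interface {
	IsHourAvailable(context.Context, *IsHourAvailableRequest) (*IsHourAvailableResponse, error)
}

// stubServer is a hypothetical implementation used only in this sketch.
type stubServer struct {
	available bool
}

func (s stubServer) IsHourAvailable(_ context.Context, _ *IsHourAvailableRequest) (*IsHourAvailableResponse, error) {
	return &IsHourAvailableResponse{IsAvailable: s.available}, nil
}

// Compile-time check: the build breaks if stubServer stops satisfying the
// contract, for example after a method signature changes in the .proto file.
var _ TrainerServiceServer = stubServer{}

// checkAvailable talks to the server only through the interface.
func checkAvailable(srv TrainerServiceServer) (bool, error) {
	resp, err := srv.IsHourAvailable(context.Background(), &IsHourAvailableRequest{})
	if err != nil {
		return false, err
	}
	return resp.IsAvailable, nil
}

func main() {
	ok, err := checkAvailable(stubServer{available: true})
	fmt.Println(ok, err)
}
```

Changing the return type of `stubServer.IsHourAvailable` to anything other than `*IsHourAvailableResponse` makes the `var _ ...` line fail to compile, which is the kind of guarantee described above.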

Protobuf also has a built-in ability to handle field deprecation and backward compatibility. That’s pretty helpful in an environment with many independent teams.

Note

Protobuf vs gRPC

Protobuf (Protocol Buffers) is the Interface Definition Language used by default for defining the service interface and the structure of the payload.

Protobuf is also used for serializing these models to binary format.

You can find more details about gRPC and Protobuf on the gRPC Concepts page.

Implementing the server works in almost the same way as with the HTTP server generated by OpenAPI – we need to implement an interface (TrainerServiceServer in this case).

type GrpcServer struct {

db db

}

func (g GrpcServer) IsHourAvailable(ctx context.Context, req *trainer.IsHourAvailableRequest) (*trainer.IsHourAvailableResponse, error) {

timeToCheck, err := grpcTimestampToTime(req.Time)

if err != nil {

return nil, status.Error(codes.InvalidArgument, "unable to parse time")

}

model, err := g.db.DateModel(ctx, timeToCheck)

if err != nil {

return nil, status.Error(codes.Internal, fmt.Sprintf("unable to get data model: %s", err))

}

if hour, found := model.FindHourInDate(timeToCheck); found {

return &trainer.IsHourAvailableResponse{IsAvailable: hour.Available && !hour.HasTrainingScheduled}, nil

}

return &trainer.IsHourAvailableResponse{IsAvailable: false}, nil

}

As you can see, you cannot return anything other than IsHourAvailableResponse, and you can always be sure that you will receive IsHourAvailableRequest.

In case of an error, you can return one of the predefined error codes.

They are more up-to-date than HTTP status codes, with codes such as DeadlineExceeded or Unavailable.
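The handler above calls grpcTimestampToTime, which is not shown in this article. A minimal sketch of such a conversion could look like the code below. It assumes a hypothetical `protoTimestamp` struct that mirrors the two fields of google.protobuf.Timestamp, so it runs with the standard library only. The real helper works on the generated timestamp type and may differ.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// protoTimestamp is a hypothetical stand-in mirroring the two fields of
// google.protobuf.Timestamp (seconds and nanoseconds since the Unix epoch).
type protoTimestamp struct {
	Seconds int64
	Nanos   int32
}

// timestampToTime validates the incoming value before converting it, so the
// handler can answer with InvalidArgument instead of working on garbage.
func timestampToTime(ts *protoTimestamp) (time.Time, error) {
	if ts == nil {
		return time.Time{}, errors.New("timestamp is missing")
	}
	if ts.Nanos < 0 || ts.Nanos >= 1_000_000_000 {
		return time.Time{}, fmt.Errorf("nanos out of range: %d", ts.Nanos)
	}
	return time.Unix(ts.Seconds, int64(ts.Nanos)).UTC(), nil
}

func main() {
	got, err := timestampToTime(&protoTimestamp{Seconds: 1600000000})
	fmt.Println(got, err)

	_, err = timestampToTime(nil)
	fmt.Println(err)
}
```

A message field is a pointer in the generated Go code, so a client that leaves the field unset sends nil. That is why the nil check comes first.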

Starting the gRPC server is done in the same way as with the HTTP server:

server.RunGRPCServer(func(server *grpc.Server) {

svc := GrpcServer{firebaseDB}

trainer.RegisterTrainerServiceServer(server, svc)

})

Internal gRPC client

After our server is running, it’s time to use it. First of all, we need to create a client instance.

trainer.NewTrainerServiceClient is generated from .proto.

type TrainerServiceClient interface {

IsHourAvailable(ctx context.Context, in *IsHourAvailableRequest, opts ...grpc.CallOption) (*IsHourAvailableResponse, error)

UpdateHour(ctx context.Context, in *UpdateHourRequest, opts ...grpc.CallOption) (*EmptyResponse, error)

}

type trainerServiceClient struct {

cc grpc.ClientConnInterface

}

func NewTrainerServiceClient(cc grpc.ClientConnInterface) TrainerServiceClient {

return &trainerServiceClient{cc}

}

To make the generated client work, we need to pass a couple of extra options. They will allow handling:

- authentication,

- TLS encryption,

- “service discovery” (we use hardcoded names of services provided by Terraform via the TRAINER_GRPC_ADDR env).

import (

// ...

"github.com/ThreeDotsLabs/wild-workouts-go-ddd-example/pkg/internal/genproto/trainer"

// ...

)

func NewTrainerClient() (client trainer.TrainerServiceClient, close func() error, err error) {

grpcAddr := os.Getenv("TRAINER_GRPC_ADDR")

if grpcAddr == "" {

return nil, func() error { return nil }, errors.New("empty env TRAINER_GRPC_ADDR")

}

opts, err := grpcDialOpts(grpcAddr)

if err != nil {

return nil, func() error { return nil }, err

}

conn, err := grpc.Dial(grpcAddr, opts...)

if err != nil {

return nil, func() error { return nil }, err

}

return trainer.NewTrainerServiceClient(conn), conn.Close, nil

}

After our client is created we can call any of its methods.

In this example, we call UpdateHour while creating a training.

package main

import (

// ...

"github.com/pkg/errors"

"github.com/golang/protobuf/ptypes"

"github.com/ThreeDotsLabs/wild-workouts-go-ddd-example/pkg/internal/genproto/trainer"

// ...

)

type HttpServer struct {

db db

trainerClient trainer.TrainerServiceClient

usersClient users.UsersServiceClient

}

// ...

func (h HttpServer) CreateTraining(w http.ResponseWriter, r *http.Request) {

// ...

timestamp, err := ptypes.TimestampProto(postTraining.Time)

if err != nil {

return errors.Wrap(err, "unable to convert time to proto timestamp")

}

_, err = h.trainerClient.UpdateHour(ctx, &trainer.UpdateHourRequest{

Time: timestamp,

HasTrainingScheduled: true,

Available: false,

})

if err != nil {

return errors.Wrap(err, "unable to update trainer hour")

}

// ...

}
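Since the call above is synchronous, it may be worth bounding how long the handler waits for the trainer service. The sketch below shows the idea with the standard library only. `hourUpdater`, `slowTrainer`, `callTimeout`, and the helper functions are hypothetical names introduced for illustration. They stand in for the generated client and a remote service, and are not part of Wild Workouts.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// hourUpdater is a hypothetical, trimmed-down stand-in for the generated
// TrainerServiceClient, so the sketch runs without a gRPC server.
type hourUpdater interface {
	UpdateHour(ctx context.Context) error
}

// slowTrainer simulates a remote service that needs `delay` to answer.
type slowTrainer struct {
	delay time.Duration
}

func (s slowTrainer) UpdateHour(ctx context.Context) error {
	select {
	case <-time.After(s.delay):
		return nil
	case <-ctx.Done():
		return ctx.Err()
	}
}

// callTimeout is an assumed budget for a single internal call.
const callTimeout = 50 * time.Millisecond

// updateHourWithDeadline derives a context with a deadline from the parent
// (in an HTTP handler that would typically be r.Context()).
func updateHourWithDeadline(parent context.Context, c hourUpdater) error {
	ctx, cancel := context.WithTimeout(parent, callTimeout)
	defer cancel()
	return c.UpdateHour(ctx)
}

// timedOut reports whether the call was cut off by the deadline.
func timedOut(err error) bool {
	return errors.Is(err, context.DeadlineExceeded)
}

// callTrainer wires the pieces together for a service with the given delay.
func callTrainer(delay time.Duration) error {
	return updateHourWithDeadline(context.Background(), slowTrainer{delay: delay})
}

func main() {
	fmt.Println("fast service:", callTrainer(0))
	fmt.Println("slow service timed out:", timedOut(callTrainer(10*callTimeout)))
}
```

With a real gRPC client, the deadline travels with the request and the returned error is a gRPC status with the DeadlineExceeded code, so it would be checked with the status package rather than with `errors.Is`.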

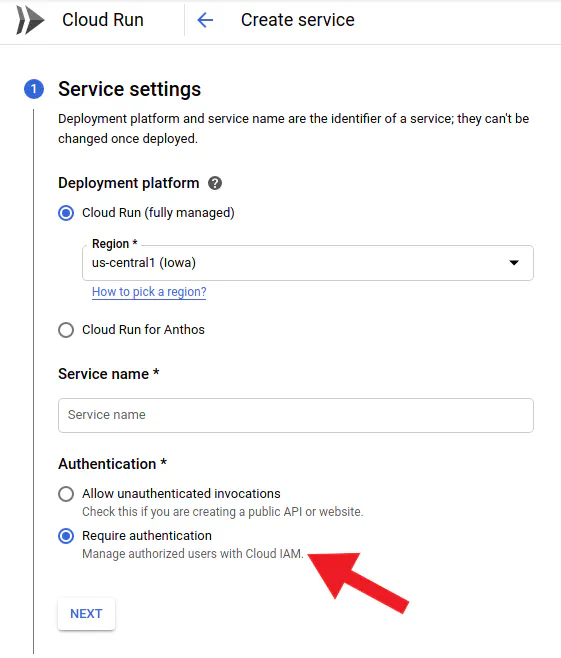

Cloud Run authentication & TLS

Authentication of the client is handled by Cloud Run out of the box.

You also need to grant the roles/run.invoker role to the calling service’s service account.

The simpler (and recommended by us) way is using Terraform. Miłosz described it in detail in the previous article.

One thing that doesn’t work out of the box is sending authentication with the request. Did I already mention that the standard gRPC transport is HTTP/2? For that reason, we can just use the good old JWT for that.

To make it work, we need to implement the google.golang.org/grpc/credentials.PerRPCCredentials interface.

The implementation is based on the official guide from Google Cloud Documentation.

type metadataServerToken struct {

serviceURL string

}

func newMetadataServerToken(grpcAddr string) credentials.PerRPCCredentials {

// based on https://cloud.google.com/run/docs/authenticating/service-to-service#go

// the service URL needs the https prefix and no port

serviceURL := "https://" + strings.Split(grpcAddr, ":")[0]

return metadataServerToken{serviceURL}

}

// GetRequestMetadata is called on every request, so the token we send is never expired

func (t metadataServerToken) GetRequestMetadata(ctx context.Context, in ...string) (map[string]string, error) {

// based on https://cloud.google.com/run/docs/authenticating/service-to-service#go

tokenURL := fmt.Sprintf("/instance/service-accounts/default/identity?audience=%s", t.serviceURL)

idToken, err := metadata.Get(tokenURL)

if err != nil {

return nil, errors.Wrap(err, "cannot query id token for gRPC")

}

return map[string]string{

"authorization": "Bearer " + idToken,

}, nil

}

func (metadataServerToken) RequireTransportSecurity() bool {

return true

}
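newMetadataServerToken derives the audience by splitting the address on ":" and taking the first part. That works for a host:port address, but it silently accepts malformed input. A slightly stricter variant, sketched below with the standard library, reports such input as an error. The function name `audienceURL` and the example host are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"net"
)

// audienceURL turns a "host:port" gRPC address into the https URL (without
// port) that is used as the audience of the ID token.
func audienceURL(grpcAddr string) (string, error) {
	host, _, err := net.SplitHostPort(grpcAddr)
	if err != nil {
		return "", fmt.Errorf("invalid gRPC address %q: %w", grpcAddr, err)
	}
	if host == "" {
		return "", fmt.Errorf("missing host in gRPC address %q", grpcAddr)
	}
	return "https://" + host, nil
}

func main() {
	// The host below is a made-up example, not a real service.
	fmt.Println(audienceURL("trainer-abc.a.run.app:443"))
	fmt.Println(audienceURL("trainer-abc.a.run.app"))
}
```

The trade-off is that the address must always contain a port. With the original approach a bare host name also works, which may be what you want for your setup.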

The last thing is passing the credentials to the []grpc.DialOption list used when creating all gRPC clients.

It’s also a good idea to ensure that our server’s certificate is valid with grpc.WithTransportCredentials.

Authentication and TLS encryption are disabled in the local Docker environment.

func grpcDialOpts(grpcAddr string) ([]grpc.DialOption, error) {

if noTLS, _ := strconv.ParseBool(os.Getenv("GRPC_NO_TLS")); noTLS {

return []grpc.DialOption{grpc.WithInsecure()}, nil

}

systemRoots, err := x509.SystemCertPool()

if err != nil {

return nil, errors.Wrap(err, "cannot load root CA cert")

}

creds := credentials.NewTLS(&tls.Config{

RootCAs: systemRoots,

})

return []grpc.DialOption{

grpc.WithTransportCredentials(creds),

grpc.WithPerRPCCredentials(newMetadataServerToken(grpcAddr)),

}, nil

}
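One detail of grpcDialOpts worth spelling out: the error from strconv.ParseBool is ignored, so an unset or unparsable GRPC_NO_TLS keeps TLS and authentication enabled. The small sketch below isolates that behavior. `tlsDisabled` is a hypothetical helper name, not a function from the project.

```go
package main

import (
	"fmt"
	"strconv"
)

// tlsDisabled mirrors the check in grpcDialOpts: only values that ParseBool
// understands as true switch TLS off. On a parse error ParseBool returns
// false, so the secure path stays the default.
func tlsDisabled(raw string) bool {
	noTLS, _ := strconv.ParseBool(raw)
	return noTLS
}

func main() {
	for _, raw := range []string{"true", "1", "false", "", "yes"} {
		fmt.Printf("GRPC_NO_TLS=%q -> TLS disabled: %v\n", raw, tlsDisabled(raw))
	}
}
```

This means a typo in the variable fails safe: the client still requires TLS instead of silently falling back to an insecure connection.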

Are all the problems of internal communication solved?

A hammer is great for hammering nails but awful for cutting down a tree. The same applies to gRPC or any other technique.

gRPC works great for synchronous communication, but not every process is synchronous by nature. Applying synchronous communication everywhere will end up creating a slow, unstable system. Currently, Wild Workouts doesn’t have any flow that should be asynchronous. We will cover this topic in more depth in the next articles by implementing new features. In the meantime, you can check out the Watermill library, which was also created by us. 😉 It helps with building asynchronous, event-driven applications the easy way.

What’s next?

Having robust contracts doesn’t mean that we are not introducing unnecessary internal communication. In some cases, operations can be done in one service in a simpler and more pragmatic way.

It is not simple to avoid these issues. Fortunately, we know techniques that are successfully helping us with that. We will share them with you soon. 😉

**Until then, we still have one article left about our "Too modern application". It will cover Firebase HTTP authentication. After that, we will start the refactoring!** As I mentioned in the [first article](/post/serverless-cloud-run-firebase-modern-go-application/), we already intentionally introduced some issues in Wild Workouts. Are you curious about what is wrong with Wild Workouts? Please let us know in the comments! 😉 See you next week 👋